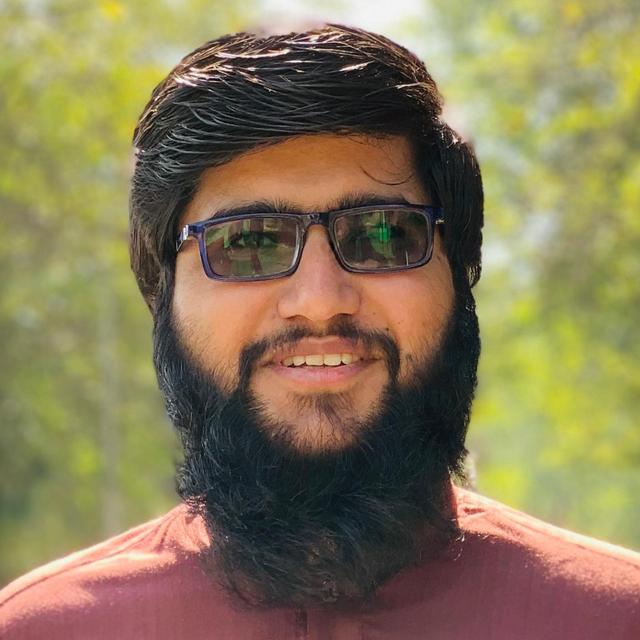

Muhammad Asad Ishfaq (@MuhammadAsad_Ishfaq)

Events attended: 27

Submissions made: 8

Pakistan

1 year of experience

About me

Hi, I am Muhammad Asad Ishfaq. I'm pursuing a BS in Artificial Intelligence. I'm a beginner in the field of AI and machine learning, but I'm eager to learn and grow. I have some basic knowledge of Python and its libraries, data analysis, machine learning, and Microsoft Azure. I'm particularly interested in using large language models (LLMs) to solve real-world problems. I'm looking forward to collaborating with other participants and mentors to develop innovative solutions. I'm also passionate about solving real-world problems with AI, collaborating with others, and learning new things. I'm looking forward to learning and growing with you all!

🤓 Latest Submissions

Thematic Learning Project

Thematic Learning is an application that generates a complete course on a theme the user enters: the course itself, the practice exercises, and the evaluations. It also grades users and reports their score as a percentage. The project demonstrates the potential of AI in education by automating the creation of course content and assessment questions, responding to student queries, and assessing students. This improves educators' efficiency and ensures high-quality learning materials for students. It also includes full marking functionality, automating the grading process and providing valuable feedback.

2 Jun 2024

Navigator

During this Hackathon, we delved into the feasibility of rapidly developing web-based businesses primarily powered by Vectara-supported agents. This innovative approach not only provides the flexibility to integrate Human-in-the-Loop systems but also supports continuous training opportunities. Our exploratory findings demonstrate that such a business model can significantly enhance operational efficiency and adaptability. By leveraging dynamic structures and automation, there is a marked reduction in reliance on manual processes and personnel. Moreover, this model promotes a comprehensive and transparent view of business operations, optimizing resource management and improving overall performance. Although this Hackathon served as an initial investigation into the feasibility of the Vectara solution, with only a conceptual rather than a full implementation, our prior experience with Vectara RAG gave us confidence in the soundness of this approach. We stand on the brink of a transformative era in data management and business operations. The continued exploration and development of these technologies will be crucial in realizing their full potential. As we move forward, it is clear that much work remains, yet the path ahead is promising and ripe with opportunities for innovation.

19 Apr 2024

Games Gemini Ultra Simulator

Agent-Driven Success: Our hackathon triumph was achieved through the development of "The Adaptive Simulation Sandbox," powered by Gemini's cognitive abilities. This system featured four distinct agents. Clara "The Conductor" Williams, the Lead Author, aimed to improve meeting efficiency. Eddie "Eagle Eye" Thompson, the Editor and Quality Controller, ensured the accuracy and clarity of our content. Sofia "The Skeptic" Ramirez, the Critic and User Advocate, evaluated our content for learner inclusivity. Alex "The Innovator" Kim, the Multimedia Specialist, added visual engagement to the course. Together, they produced an effective meeting management course, demonstrating teamwork and problem-solving powered by synthetic data.

Key Insights: The hackathon revealed four key achievements with the Gemini system. Conceptualization: Gemini showcased its ability to ideate complex projects, conceiving "The Adaptive Simulation Sandbox." Agent Personification: By assigning agents unique identities and roles, Gemini created a narrative-rich simulation environment. Interaction Dynamics: Gemini enabled realistic agent interactions, facilitating collaborative course creation on effective meetings. Synthetic Data Utilization: Gemini's generation of realistic synthetic data supported the project's success, highlighting its applications in AI training and beyond. These achievements highlight Gemini's versatility in synthetic data generation and complex problem-solving.

25 Mar 2024

Tlers The All in-One Translation Powerhouse

Tlers: Your Real-Time Translation Powerhouse. Tlers is a translation tool built using Node.js and the Claude API. It acts as a server that bridges communication gaps by translating text between languages in real time. Imagine a world where language barriers don't exist. With Tlers, you can achieve clear and effortless communication with anyone, regardless of their native tongue.

Here's how Tlers empowers you. Simple Integration: Integrate Tlers easily into your applications or websites using a user-friendly API. Real-Time Speed: Get instant translations without delays, fostering smooth conversation and collaboration. Language Versatility: Translate text between a wide range of languages, covering a significant portion of the global population. Enhanced User Experience: Tlers empowers businesses and individuals to overcome language barriers, creating a more inclusive and connected world.

Beyond Basic Translation: Tlers is more than just a translation tool. It's a bridge that allows people to connect, share ideas, and collaborate more effectively. Whether you're a business aiming to reach a global audience, a traveler exploring new cultures, or simply someone wanting to connect with people from other backgrounds, Tlers unlocks a world of possibilities.

Join the Future of Communication: Tlers leverages the power of Node.js and the Claude API to provide a robust and reliable translation solution. As the world becomes increasingly interconnected, Tlers positions itself as a valuable tool for breaking down language barriers and fostering global understanding.

16 Mar 2024

RAGE - A Day in the Life of Aya Green Data City

Welcome to RAGE. A day in the life of Aya Green Data City. Elian's first day at Quantum Data Institute in Green Open Data City highlights the fusion of technology and nature. Mentored by Dr. Maya Lior, they delve into the city's use of data analytics and a custom GPT for sustainable design. Their visit to the Cultural Center, equipped with advanced sensors and smart systems, emphasizes innovation in sustainability. Meeting environmental scientist Mohammad, they use the Cohere translator for a dialogue on global citizenship and climate action, showcasing the power of technology in bridging language barriers. Exploring the Innovation Center, Mohammad introduces a digital map for community engagement in sustainability, driven by an incentive program. This program encourages active participation and transparency in environmental stewardship. They discuss optimizing the HIN number, a metric balancing sensor data realism and AI predictions, for effective environmental management. The day concludes with the strengthening of Elian and Mohammad's friendship, rooted in their shared commitment to innovation and teamwork in enhancing Green Data City's sustainable future.

7 Mar 2024

Breaking Free

The ongoing dialogue between humans and AI not only showcases the remarkable capabilities of current technologies but also illuminates the future possibilities of AI-human synergy, promising an era where AI enhances human creativity, decision-making, and problem-solving in unprecedented ways. Our hackathon project explored the interaction between humans and Large Language Models (LLMs) over time, developing a novel metric, the Human Interpretive Number (HIN Number), to quantify this dynamic. Leveraging tools like TruLens for groundedness analysis and HHEM for hallucination evaluation, we integrated features like a custom GPT-5 scene writer, the CrewAI model translator, and interactive DALL-E images with text-to-audio conversion to enhance understanding. The HIN Number, defined as the product of Groundedness and Hallucination scores, serves as a new benchmark for assessing LLM interpretive accuracy and adaptability. Our findings revealed a critical inflection point: LLMs without guardrails showed improved interaction quality and higher HIN Numbers over time, while those with guardrails experienced a decline. This suggests that unrestricted models adapt better to human communication, highlighting the importance of designing LLMs that can evolve with their users. Our project underscores the need for balanced LLM development, focusing on flexibility and user engagement to foster more meaningful human-AI interactions.
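The HIN Number definition above (the product of the Groundedness and Hallucination scores) can be illustrated with a minimal Python sketch. It is not the project's actual code: the function name `hin_number`, the assumption that both scores lie in the range 0 to 1, and the example values are all hypothetical.

```python
def hin_number(groundedness: float, hallucination_score: float) -> float:
    """Return the HIN Number as the product of the two scores.

    Assumption (not stated in the project description): both scores
    are normalized to the range [0, 1].
    """
    for name, value in (
        ("groundedness", groundedness),
        ("hallucination_score", hallucination_score),
    ):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return groundedness * hallucination_score


# Hypothetical scores for a single LLM response (not project results).
print(hin_number(0.8, 0.5))  # 0.4
```

Because the metric is a product, a low value on either score pulls the HIN Number down, so a response needs to do well on both measures to score highly.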

23 Feb 2024

Video Makers

At the heart of our vision is the belief that generative AI technology has revolutionized the film industry, opening doors for virtually anyone to step into the role of a movie producer. Our project in this Hackathon aims to validate this concept by crafting a concise, 35-second film "composed of shorts," leveraging cutting-edge generative AI tools, namely Custom GPT and Stable Video Diffusion, in line with the competition's guidelines. Our methodology encompassed four distinct phases: (1) utilizing our Script Writing Custom GPT to generate the film script and accompanying images; (2) employing ElevenLabs for the audio narration of our script; (3) transforming the generated images into brief, 5-second video segments using a Stable Video Diffusion Image-to-Video XT tool from Hugging Face; and (4) assembling these segments in Filmora to produce a cohesive 35-second film, complete with narration and sound effects. Overall, the execution was remarkably smooth. We navigated technical hurdles with ease, and our strategy of utilizing autonomous agents for script and image creation proved to be a groundbreaking approach for swiftly developing scripts for short films.
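The timing arithmetic behind the final assembly step can be sketched in a few lines of Python. This is only an illustration, assuming the 5-second segments are equal in length and placed back to back with no transitions; the function name `plan_segments` is hypothetical and not part of the project.

```python
def plan_segments(total_seconds: int, clip_seconds: int) -> list[tuple[int, int]]:
    """Return (start, end) times, in seconds, for back-to-back clips.

    Assumption: every clip has the same length and the clips tile the
    film exactly, with no overlaps or transitions.
    """
    if total_seconds <= 0 or clip_seconds <= 0:
        raise ValueError("durations must be positive")
    if total_seconds % clip_seconds != 0:
        raise ValueError("clip length must divide the total duration")
    return [
        (start, start + clip_seconds)
        for start in range(0, total_seconds, clip_seconds)
    ]


# A 35-second film built from 5-second segments.
timeline = plan_segments(35, 5)
print(len(timeline))  # 7
print(timeline[0], timeline[-1])  # (0, 5) (30, 35)
```

Under these assumptions, a 35-second film needs seven generated images, one per 5-second segment.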

22 Dec 2023

QuantumBlend

Quantum Blend is a groundbreaking project that explores the fusion of text and images using the cutting-edge Gemini AI model. Our vision is to create a platform where language transcends traditional boundaries, intertwining with visually stunning elements to evoke a new level of engagement and understanding. Quantum Blend's innovative approach aims to revolutionize communication, storytelling, and artistic expression, setting the stage for a future where AI becomes a true creative collaborator. Join us on this transformative journey, where Quantum Blend opens doors to unparalleled possibilities in the world of multimodal AI.

22 Dec 2023
