🤝 Top Collaborators

🤓 Latest Submissions

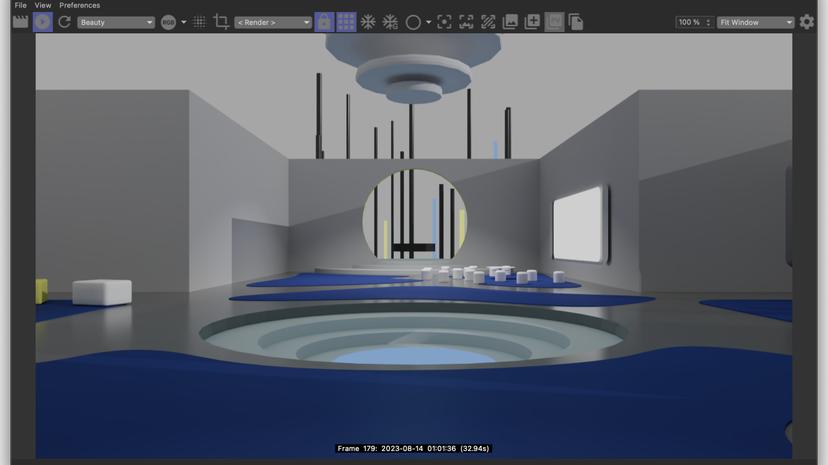

Smart Decisions with AI and VR

It has been known for a while that using visual aids and physical props when discussing important decisions tangibly enhances a team's creativity and its ability to focus on the most significant aspects of the decision at hand. Conducting this exercise in a fun, unfamiliar environment makes the whole process even more conducive to creative, out-of-the-box thinking. This idea is behind the setup of many design-thinking and ideation rooms across the world. This 3D-AI hackathon gave us the opportunity to explore the idea further. Our team came up with a concrete business scenario and an ideal workflow to support it. Although our final implementation fell a bit short of this grand vision, the exercise allowed us to (1) get a deeper understanding of the value of generative AI for purpose-driven decision making, (2) get an appreciation of where the tech is today and how it is evolving to effectively support that process, and (3) learn a lot, laugh a bunch, and really come together as a team. In the end we were 13 people from 4 continents and 6 countries who, for the most part, had never met nor worked together before the challenge.

14 Aug 2023

Exponential workflows with STS

By now most people have experienced one-human-to-one-agent collaboration, thanks to the unprecedented success of ChatGPT. Today, emerging technologies like SuperAGI and LangChain enable agent-to-agent collaboration. Our aim is to unleash the power of exponential workflows by enabling dynamic, real-time collaboration between multiple humans and multiple autonomous agents. As a backdrop we used a concrete business application: a team of business and IT professionals works together with autonomous agents to prepare a list of topics for consideration at the next board meeting of a mining operation. In our scenario, human operators start by asking multiple agents for assistance in preparing this report. As content gets added, vetted, and integrated by the humans, the agents have more and more information at their disposal to enrich and contextualize their responses.

21 Aug 2023

Climate Impact with STS

We've combined our cognitive architecture system with the Weaviate and Cohere technologies to provide an immersive experience where users can focus on the task at hand rather than having to wrestle with the intricacies of prompt engineering. We started with a collection of climate-action reference documents, which we summarized with Cohere and loaded into the Weaviate database to experiment with various querying and reranking options. We then integrated the results into the STS42 platform, where users can interact with the documents through the Cohere chat API. The result is a back-and-forth collaboration where the human controls which of the LLM answers and suggestions are to be considered for the follow-up questions.

18 Nov 2023
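
The flow described in the Climate Impact entry (query the documents, rerank the hits, and let the human decide which answers carry over to the next question) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the word-overlap scoring is a toy stand-in for real vector search and reranking, the `Session` class and all function names are hypothetical, and none of this is the Cohere, Weaviate, or STS42 API.

```python
from dataclasses import dataclass, field


def tokens(text: str) -> set[str]:
    """Lowercased word set; a toy stand-in for a real embedding."""
    words = (w.strip(".,;:!?").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, docs: list[str], k: int = 3) -> list[str]:
    """First pass: keep the k documents sharing the most words with the query."""
    q = tokens(query)
    ranked = sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)
    return ranked[:k]


def rerank(query: str, candidates: list[str]) -> list[str]:
    """Second pass: reorder the candidates by Jaccard similarity to the query."""
    q = tokens(query)

    def score(doc: str) -> float:
        t = tokens(doc)
        union = q | t
        return len(q & t) / len(union) if union else 0.0

    return sorted(candidates, key=score, reverse=True)


@dataclass
class Session:
    """Hypothetical session holding only the answers the human approved."""
    approved: list[str] = field(default_factory=list)

    def approve(self, answer: str) -> None:
        # Only approved answers are ever carried into a follow-up prompt.
        self.approved.append(answer)

    def build_prompt(self, question: str, docs: list[str]) -> str:
        context = rerank(question, retrieve(question, docs))
        parts = ["Context:", *context, "Approved so far:", *self.approved,
                 "Question: " + question]
        return "\n".join(parts)
```

A rejected suggestion never reaches `approve`, so it never appears in the next prompt; that is the sense in which the human steers the follow-up questions in this sketch.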
