Stable Diffusion tutorial: Prompt Inpainting with Stable Diffusion

What is Inpainting?

Image inpainting is an active area of AI research, and recent models can produce inpainting results that rival, and sometimes surpass, manual retouching by artists.

Inpainting produces images in which the missing parts are filled with visually and semantically plausible content. It is useful for many applications, such as advertisements, polishing your next Instagram post, editing and fixing AI-generated images, and even repairing old photos. There are many ways to perform inpainting, but the most common method is to use a convolutional neural network (CNN).

A CNN is well suited to inpainting because it learns the features of the image and uses them to fill in the missing content. Many different CNN architectures can be used for this.

Short introduction to Stable Diffusion

Stable Diffusion is a latent text-to-image diffusion model capable of generating stylized and photo-realistic images. It is pre-trained on a subset of the LAION-5B dataset and can run at home on a consumer-grade graphics card, so anyone can create stunning art within seconds.

How to do Inpainting with Stable Diffusion

This tutorial shows how to do prompt-based inpainting with Stable Diffusion and CLIPSeg, without having to paint the mask by hand. A mask in this case is a binary image that tells the model which part of the image to inpaint and which part to keep. You also need a capable GPU; the Tesla T4 available on Google Colab works fine.
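To make the role of the mask concrete, here is a minimal, library-free sketch of the keep/replace semantics: white mask pixels (255) take the newly generated content, black pixels (0) keep the original. The tiny 2x3 "images" and the function name `composite` are illustrative assumptions. The actual pipeline applies the mask during the diffusion process rather than as a final pixel copy, so treat this only as a mental model.

```python
def composite(original, generated, mask):
    """Take the generated pixel where the mask is 255, else keep the original."""
    return [
        [g if m == 255 else o for o, g, m in zip(orow, grow, mrow)]
        for orow, grow, mrow in zip(original, generated, mask)
    ]


# Toy single-channel "images" (hypothetical values, 2 rows x 3 columns)
original = [[10, 10, 10], [10, 10, 10]]
generated = [[99, 99, 99], [99, 99, 99]]
mask = [[0, 255, 255], [0, 0, 255]]

result = composite(original, generated, mask)
print(result)
```

Only the positions marked 255 in the mask change; everything else is carried over from the original image.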

Inpainting takes three mandatory inputs:

- Input Image URL

- Prompt of the part in the input image that you want to replace

- Output Prompt

There are also two parameters that you can tune:

- Mask Precision

- Stable Diffusion Generation Strength
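Generation strength is easiest to understand as "how much of the original is thrown away". The following is a rough sketch, assuming the scheduling used by image-to-image style pipelines in diffusers, where the input is noised in proportion to `strength` and only that fraction of the denoising steps is run. The function name and the choice of 50 steps are illustrative assumptions, not values taken from this tutorial.

```python
def effective_denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Approximate how many denoising steps run for a given strength.

    Assumption: mirrors image-to-image style scheduling, where strength
    scales the step count. This is not the library's exact code.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)


# Higher strength means more steps, and so more change to the masked region
for s in (0.5, 0.8, 1.0):
    print(s, effective_denoising_steps(50, s))
```

Under this assumption, a strength near 1.0 regenerates the masked region almost from scratch, while lower values stay closer to the original pixels.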

If you are using Stable Diffusion from Hugging Face 🤗 for the first time, you need to accept the terms of service on the model page and get an access token from your user profile.

So let's get started!

Install Git LFS, the open-source Git extension for versioning large files

! git lfs install

Clone the clipseg repository

! git clone https://github.com/timojl/clipseg

Install the diffusers package from PyPI

! pip install diffusers -q

Install some more helpers

! pip install -q -U transformers ftfy gradio

Install CLIP with pip

! pip install git+https://github.com/openai/CLIP.git -q

Next, log in to Hugging Face by running the following:

from huggingface_hub import notebook_login

notebook_login()

After the login process is complete, you will see the following output:

Login successful

Your token has been saved to /root/.huggingface/token

Change into the cloned repository and list its contents:

%cd clipseg

! ls

datasets metrics.py supplementary.pdf

environment.yml models Tables.ipynb

evaluation_utils.py overview.png training.py

example_image.jpg Quickstart.ipynb Visual_Feature_Engineering.ipynb

experiments Readme.md weights

general_utils.py score.py

LICENSE setup.py

import requests

import torch

import cv2

import PIL

from io import BytesIO

from matplotlib import pyplot as plt

from PIL import Image

from torch import autocast

from torchvision import transforms

from diffusers import StableDiffusionInpaintPipeline

from models.clipseg import CLIPDensePredT

Load the model

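model = CLIPDensePredT(version='ViT-B/16', reduce_dim=64)

model.eval();

model.load_state_dict(torch.load('/content/clipseg/weights/rd64-uni.pth', map_location=torch.device('cpu')), strict=False);

Loading is non-strict because the checkpoint stores only the decoder weights, not the CLIP weights. The weights are mapped to the CPU because the CLIPSeg model itself is never moved to the GPU in this tutorial.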

device = "cuda"

pipe = StableDiffusionInpaintPipeline.from_pretrained(

    "CompVis/stable-diffusion-v1-4",

    revision="fp16",

    torch_dtype=torch.float16,

    use_auth_token=True

).to(device)

Load the input image from an external URL like this:

image_url = 'https://okmagazine.ge/wp-content/uploads/2021/04/00-promo-rob-pattison-1024x1024.jpg'

input_image = Image.open(requests.get(image_url, stream=True).raw)

transform = transforms.Compose([

    transforms.ToTensor(),

    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

    transforms.Resize((512, 512)),

])

img = transform(input_image).unsqueeze(0)
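As a rough illustration of what the transform above does to each pixel, the sketch below reproduces the arithmetic of `ToTensor` followed by `Normalize` in plain Python: channel values are scaled from 0..255 to 0..1, then shifted by the per-channel mean and divided by the per-channel standard deviation. The helper name `normalize_pixel` is hypothetical and exists only for this example; the mean and standard deviation are the values used in the tutorial code.

```python
MEAN = (0.485, 0.456, 0.406)  # per-channel mean, as in the tutorial's transform
STD = (0.229, 0.224, 0.225)   # per-channel standard deviation


def normalize_pixel(rgb):
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    scaled = [c / 255.0 for c in rgb]
    return [(c - m) / s for c, m, s in zip(scaled, MEAN, STD)]


print(normalize_pixel((0, 0, 0)))        # black maps to negative values
print(normalize_pixel((255, 255, 255)))  # white maps to positive values
```

After normalization the values are centered roughly around zero, which is the input range the CLIP-based model was trained on.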

Move back in directories

%cd ..

Convert the input image to RGB, resize it to 512x512, and save it as a PNG

input_image.convert("RGB").resize((512, 512)).save("init_image.png", "PNG")

Display the image with matplotlib

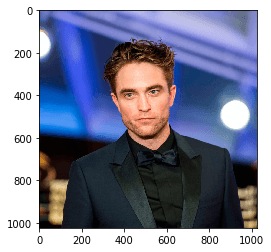

from matplotlib import pyplot as plt

plt.imshow(input_image, interpolation='nearest')

plt.show()

This will show the following image:

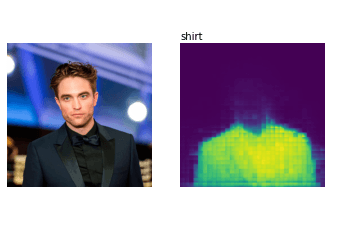

Now we define a prompt for the mask, run the prediction, and visualize the result:

prompts = ['shirt']

with torch.no_grad():

    preds = model(img.repeat(len(prompts),1,1,1), prompts)[0]

_, ax = plt.subplots(1, 5, figsize=(15, 4))

[a.axis('off') for a in ax.flatten()]

ax[0].imshow(input_image)

[ax[i+1].imshow(torch.sigmoid(preds[i][0])) for i in range(len(prompts))];

[ax[i+1].text(0, -15, prompts[i]) for i in range(len(prompts))];

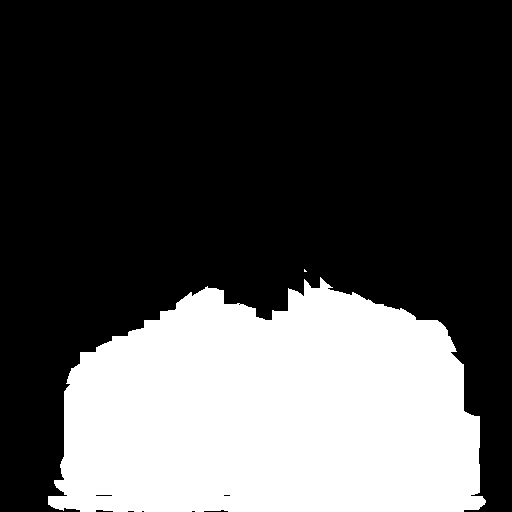
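The visualization above passes the raw predictions through `torch.sigmoid`, which squashes unbounded scores into the range 0 to 1 so they can be read as a per-pixel likelihood of matching the prompt. A minimal plain-Python sketch of that function follows; the sample scores are made up for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Map any real-valued score to the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical raw scores: strongly negative, neutral, strongly positive
logits = [-4.0, 0.0, 4.0]
probs = [sigmoid(v) for v in logits]
print(probs)
```

Strongly negative scores end up near 0 (not the prompt), strongly positive scores near 1 (very likely the prompt), and a score of 0 maps to exactly 0.5.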

Now we have to convert this mask into a binary image and save it as a PNG file:

filename = "mask.png"

plt.imsave(filename, torch.sigmoid(preds[0][0]))

img2 = cv2.imread(filename)

gray_image = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

# Pixels brighter than 100 become white (255); all others become black (0)

(thresh, bw_image) = cv2.threshold(gray_image, 100, 255, cv2.THRESH_BINARY)

# Overwrite the file with the binary mask; it is loaded again below

cv2.imwrite(filename, bw_image)

# The mask is single-channel, so no color conversion is needed to display it

Image.fromarray(bw_image)
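The thresholding step can be sketched without OpenCV to show what it does to each pixel: with `cv2.THRESH_BINARY`, every value strictly greater than the threshold becomes the maximum value and everything else becomes 0. The helper names and the toy grayscale values below are assumptions for illustration. The threshold is presumably what the "Mask Precision" parameter mentioned earlier controls, since lowering it enlarges the mask and raising it shrinks it.

```python
def threshold_binary(gray, thresh=100, maxval=255):
    """Pure-Python equivalent of a binary threshold on a 2D grayscale grid."""
    return [[maxval if px > thresh else 0 for px in row] for row in gray]


def coverage(gray, thresh):
    """Fraction of pixels that end up white (selected for inpainting)."""
    mask = threshold_binary(gray, thresh)
    total = sum(len(row) for row in mask)
    white = sum(px == 255 for row in mask for px in row)
    return white / total


# Toy grayscale values (hypothetical), 2 rows x 3 columns
gray = [[12, 100, 101], [200, 99, 255]]

binary_mask = threshold_binary(gray)
print(binary_mask)
print(coverage(gray, 50), coverage(gray, 150))
```

Note that a pixel exactly equal to the threshold (100 here) stays black, because the comparison is strict.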

Now we have a mask that looks like this:

Now load the input image and the created mask

init_image = Image.open('init_image.png')

mask = Image.open('mask.png')

And finally the last step: Inpainting with a prompt of your choice. Depending on your hardware, this will take a few seconds.

with autocast("cuda"):

    images = pipe(prompt="a yellow flowered holiday shirt", init_image=init_image, mask_image=mask, strength=0.8)["sample"]

On Google Colab you can display the image by just typing its name:

images[0]

Now you will see that the shirt we created a mask for got replaced with our new prompt! 🎉

Thank you! If you enjoyed this tutorial you can find more and continue reading on our tutorial page - Fabian Stehle, Data Science Intern at New Native