
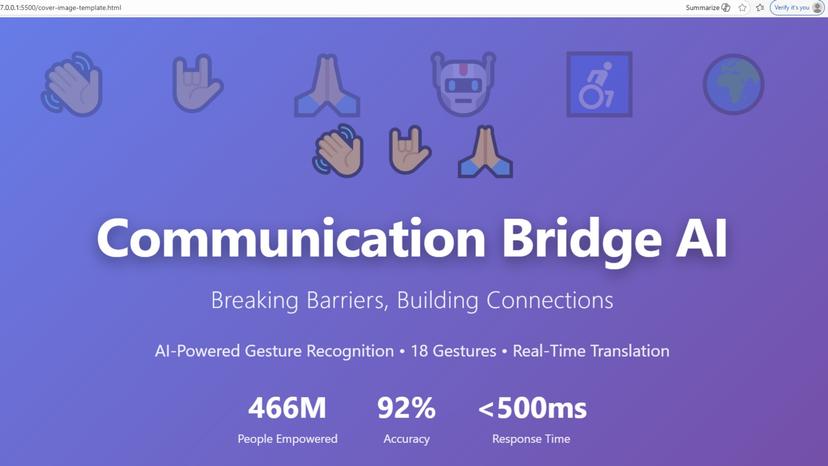

Communication Bridge AI

Communication Bridge AI is an intelligent platform that breaks down communication barriers between verbal and non-verbal individuals using AI. The system features real-time gesture recognition powered by MediaPipe and supports 18 hand gestures, including ASL signs such as "I Love You," "Thank You," and "Help." Our multi-agent AI architecture includes Intent Detection, Gesture Interpretation, Speech Generation, and Context Learning agents, coordinated by a central orchestrator.

The platform offers bidirectional communication. Verbal users can speak or type messages that are translated into gesture emojis, while non-verbal users can make hand gestures, captured via webcam, that are interpreted and converted into natural-language responses. Built with a Python FastAPI backend and a vanilla JavaScript frontend, the system integrates Google Gemini for context-aware responses and MediaPipe for computer vision. Key features include JWT-based user authentication, conversation history, three input methods (webcam, speech-to-text, and text), and a freemium model with credit-based usage.

The platform addresses a critical need for the 466 million people with hearing loss and the 70 million sign language users worldwide. Primary use cases include special education classrooms, healthcare patient communication, workplace accessibility, and family connections. The system achieves 92% gesture recognition accuracy with sub-500 ms response times.

Technical highlights: deployment on cloud infrastructure (Brev/Vultr), an SQLite database for persistence, a responsive web interface, and a production-ready configuration with systemd services and an Nginx reverse proxy. The project demonstrates a practical application of AI for social good, aligning with the UN Sustainable Development Goals for Quality Education and Reduced Inequalities.

Live demo: https://3001-i1jp0gsn9.brevlab.com

GitHub: https://github.com/Hlomohangcue/Communication-Agent-AI
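To illustrate how a central orchestrator might route the two directions of communication through the four agents, here is a minimal, self-contained Python sketch. Only the four agent roles come from the description above; the class names, gesture labels, emoji tables, and keyword rules are hypothetical stand-ins. Gemini and MediaPipe are not modeled, and gesture recognition is assumed to have already produced a label string.

```python
from __future__ import annotations

from collections import deque

# Hypothetical vocabularies: small illustrative subsets, not the project's
# actual 18-gesture set or its emoji mapping.
GESTURE_TO_TEXT = {
    "i_love_you": "I love you",
    "thank_you": "thank you",
    "help": "I need help",
    "thumbs_up": "yes",
}
WORD_TO_EMOJI = {
    "hello": "👋",
    "love": "🤟",
    "thank": "🙏",
    "help": "🆘",
    "yes": "👍",
}


class IntentDetectionAgent:
    """Keyword rules standing in for an LLM-based intent classifier."""

    def detect(self, text: str) -> str:
        lowered = text.lower()
        if "help" in lowered:
            return "request_help"
        if "thank" in lowered:
            return "gratitude"
        return "statement"


class GestureInterpretationAgent:
    """Maps gesture labels to phrases and words to gesture emojis."""

    def gesture_to_text(self, gesture: str) -> str | None:
        return GESTURE_TO_TEXT.get(gesture)

    def text_to_emojis(self, text: str) -> list[str]:
        # Strip basic punctuation so "help!" matches "help".
        words = (w.strip(".,!?").lower() for w in text.split())
        return [WORD_TO_EMOJI[w] for w in words if w in WORD_TO_EMOJI]


class SpeechGenerationAgent:
    """Formats a phrase as a sentence; a stand-in for a generated reply."""

    def generate(self, phrase: str, intent: str) -> str:
        sentence = phrase[0].upper() + phrase[1:]
        return sentence + ("!" if intent == "request_help" else ".")


class ContextLearningAgent:
    """Keeps a bounded window of recent turns; the oldest turns drop off first."""

    def __init__(self, max_turns: int = 10) -> None:
        self.history: deque[tuple[str, str]] = deque(maxlen=max_turns)

    def record(self, speaker: str, text: str) -> None:
        self.history.append((speaker, text))


class Orchestrator:
    """Routes each input through the agents for its direction."""

    def __init__(self) -> None:
        self.intent = IntentDetectionAgent()
        self.gestures = GestureInterpretationAgent()
        self.speech = SpeechGenerationAgent()
        self.context = ContextLearningAgent()

    def handle_text(self, text: str) -> dict:
        """Verbal user's speech or typed text -> gesture emojis."""
        self.context.record("verbal", text)
        return {
            "intent": self.intent.detect(text),
            "emojis": self.gestures.text_to_emojis(text),
        }

    def handle_gesture(self, gesture: str) -> dict:
        """Recognized gesture label -> natural-language sentence."""
        phrase = self.gestures.gesture_to_text(gesture)
        if phrase is None:
            return {"intent": "unknown", "text": None}
        intent = self.intent.detect(phrase)
        sentence = self.speech.generate(phrase, intent)
        self.context.record("non-verbal", sentence)
        return {"intent": intent, "text": sentence}


if __name__ == "__main__":
    bridge = Orchestrator()
    print(bridge.handle_text("Hello, thank you!"))
    # {'intent': 'gratitude', 'emojis': ['👋', '🙏']}
    print(bridge.handle_gesture("help"))
    # {'intent': 'request_help', 'text': 'I need help!'}
```

Keeping each agent behind a single method lets the orchestrator swap a keyword rule for a model call, such as a Gemini request in place of `SpeechGenerationAgent.generate`, without changing the routing logic. The bounded `deque` is one simple way a context agent could cap how much conversation history it feeds back into later responses.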

15 Feb 2026
