
GPT-4V(ision)

Discover GPT-4 with Vision (GPT-4V), the addition to the GPT-4 series that lets the model take images as input alongside text, extending its capabilities to visual tasks across diverse domains.

| General | |
|---|---|
| Release date | September 25, 2023 |
| Author | OpenAI |
| Documentation | OpenAI's Guide |
| Type | AI Model with Visual Understanding |

Overview

GPT-4 Vision adds visual interpretation to the GPT-4 framework, extending the model beyond language understanding. It can process images alongside text inputs in the same request.
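In practice, text and image inputs travel together in a single chat message. The sketch below builds such a message as a plain Python dict. It assumes the content-part layout (a `"text"` part plus an `"image_url"` part with an optional `detail` of `"low"`, `"high"` or `"auto"`) described in OpenAI's vision guide at the time of writing; `build_vision_message` and the example URL are hypothetical. The commented-out call uses the official `openai` Python package, and `gpt-4-vision-preview` was the launch model name, which may since have been retired in favor of newer vision-capable models.

```python
from typing import Any


def build_vision_message(prompt: str, image_url: str, detail: str = "auto") -> dict[str, Any]:
    """Return one user message that pairs a text prompt with an image.

    Assumption: the "text"/"image_url" content-part layout matches OpenAI's
    Chat Completions vision input; check the current API reference.
    """
    if detail not in {"low", "high", "auto"}:
        raise ValueError(f"unsupported detail level: {detail!r}")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": image_url, "detail": detail}},
        ],
    }


message = build_vision_message(
    "What objects are on the table?",
    "https://example.com/table.jpg",  # hypothetical image URL
)

# Sending it with the official SDK (pip install openai) could look like:
#   from openai import OpenAI
#   client = OpenAI()  # reads OPENAI_API_KEY from the environment
#   reply = client.chat.completions.create(
#       model="gpt-4-vision-preview", messages=[message], max_tokens=300
#   )
#   print(reply.choices[0].message.content)
```

Keeping payload construction separate from the network call makes it easy to inspect or test a request before spending tokens on it.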

Visionary Integration

GPT-4 Vision combines language reasoning with image analysis, so a single model can answer questions about, follow instructions on, and draw conclusions from what an image shows.

Capabilities

Discover the key abilities of GPT-4 Vision across various domains and tasks:

1. Visual Understanding

Object Detection

Identifies and analyzes objects within images, demonstrating broad image understanding.

Visual Question Answering

Answers questions about an image, including follow-up questions later in the same conversation, and offers relevant information and suggestions.
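Follow-up questions work because the conversation history, including the turn that carries the image, is sent again with each request; the Chat Completions API does not keep state between calls. The sketch below shows one way to grow that history. The helper names are hypothetical, the message layout is assumed to follow OpenAI's vision guide, and the assistant reply is hard-coded where a real application would take it from the API response.

```python
from typing import Any

Message = dict[str, Any]


def ask_about_image(prompt: str, image_url: str) -> list[Message]:
    """Start a conversation whose first user turn contains the image."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


def add_follow_up(history: list[Message], assistant_reply: str, question: str) -> list[Message]:
    """Append the model's previous answer and a text-only follow-up question.

    The image is not attached again: it stays in the first user turn, which
    is resent with the rest of the history on the next request.
    """
    return history + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": question},
    ]


history = ask_about_image("What dish is shown here?", "https://example.com/dish.jpg")
# Hard-coded stand-in for the text the API would return for the first turn.
history = add_follow_up(history, "It looks like a margherita pizza.", "How could I make it at home?")
```

The full `history` list would then be passed as `messages` in the next request.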

2. Multifaceted Processing

Multiple Condition Processing

Interprets and responds to several instructions in a single prompt, demonstrating versatility with complex, multi-part queries.
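A multi-part query can be expressed as one text part listing every instruction, followed by one or more images in the same user message. The sketch below assumes OpenAI's documented support for several `"image_url"` parts in one message; numbering the instructions is just one prompt-writing convention, and the helper name and URLs are hypothetical.

```python
from typing import Any


def build_multi_condition_message(instructions: list[str], image_urls: list[str]) -> dict[str, Any]:
    """Pack several numbered instructions and several images into one user turn."""
    numbered = "\n".join(f"{i}. {text}" for i, text in enumerate(instructions, start=1))
    prompt = "Follow all of these instructions:\n" + numbered
    content: list[dict[str, Any]] = [{"type": "text", "text": prompt}]
    # Each image becomes its own content part after the instructions.
    for url in image_urls:
        content.append({"type": "image_url", "image_url": {"url": url}})
    return {"role": "user", "content": content}


msg = build_multi_condition_message(
    ["List every fruit you can see.", "Say which fruit is ripest.", "Answer in under 50 words."],
    ["https://example.com/bowl1.jpg", "https://example.com/bowl2.jpg"],
)
```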

Data Analysis

Reads and analyzes visual data such as graphs and charts, providing insights into what the data shows.
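Charts often live in local files rather than at public URLs. OpenAI's vision guide shows passing such images inline as base64 data URLs (`data:image/png;base64,...`), and the sketch below assumes that format. `image_to_data_url`, `chart_question` and the file name in the comment are hypothetical; only the standard library is used.

```python
import base64
import mimetypes
from pathlib import Path
from typing import Any


def image_to_data_url(data: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def chart_question(chart_path: str, question: str) -> dict[str, Any]:
    """Build a user message that asks a question about a local chart file."""
    path = Path(chart_path)
    # Guess the MIME type from the file extension; fall back to PNG.
    mime_type = mimetypes.guess_type(path.name)[0] or "image/png"
    url = image_to_data_url(path.read_bytes(), mime_type)
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": url}},
        ],
    }


# Example (hypothetical file):
#   msg = chart_question("sales_q3.png", "Which month had the highest revenue?")
```

Inline images make the request larger than a URL would, so hosting large charts and passing their URL may be preferable when that is an option.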

3. Language and Visual Fusion

Text Deciphering

Deciphers handwritten notes and hard-to-read text, often with high accuracy even in difficult cases.
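Small handwriting and dense text are the cases where the `"high"` detail setting, which gives the model a higher-resolution view, is likely to matter, and that setting also costs more input tokens. The sketch below estimates the cost using the tile formula OpenAI published for GPT-4V at the time of writing: 85 tokens in low detail; in high detail, the image is fit within 2048 x 2048, its shortest side is scaled to 768 px, and each 512 px tile costs 170 tokens on top of 85 base tokens. Pricing rules differ between models and may change, and the assumption that images are only ever scaled down (never up) is ours.

```python
import math


def image_tokens(width: int, height: int, detail: str = "high") -> int:
    """Estimate input tokens for one image under GPT-4V's published tile formula.

    Assumptions: 85 base tokens; 170 tokens per 512 px tile in high detail;
    resizing only ever shrinks the image.
    """
    if detail == "low":
        return 85
    if detail != "high":
        raise ValueError("this sketch only models 'low' and 'high' detail")
    # Step 1: fit inside a 2048 x 2048 square, keeping the aspect ratio.
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale
    # Step 2: shrink so the shortest side is at most 768 px.
    scale = min(1.0, 768 / min(w, h))
    w, h = w * scale, h * scale
    # Step 3: count the 512 px tiles needed to cover the resized image.
    tiles = math.ceil(w / 512) * math.ceil(h / 512)
    return 85 + 170 * tiles


print(image_tokens(1024, 1024))  # 768 x 768 after resizing, 4 tiles
```

For a 1024 x 1024 photo of handwritten notes this gives 765 tokens in high detail versus 85 in low detail, which is worth weighing when processing many pages.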

Addressing Challenges

Mitigating Limitations

While pioneering in vision integration, GPT-4V still faces inherent challenges:

- Reliability Issues: Occasional inaccuracies or hallucinations in visual interpretations.

- Overreliance Concerns: Users may place too much trust in inaccurate responses.

- Complex Reasoning: Difficulty with nuanced, multi-step visual tasks.
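One generic way to guard against occasional hallucinations and overreliance, not an OpenAI feature, is to ask the same question several times and only accept an answer the runs agree on (a simple majority vote, sometimes called self-consistency). The sketch below assumes the answers were already collected from repeated API calls; `consensus` and its 0.6 agreement threshold are hypothetical, and exact-string matching after normalization only suits short factual answers such as a number read off a chart.

```python
from collections import Counter
from typing import Optional


def consensus(answers: list[str], min_agreement: float = 0.6) -> tuple[Optional[str], float]:
    """Return the most common answer and the fraction of runs that agree with it.

    If agreement falls below min_agreement, the answer is None so the caller
    can route the case to a human instead of trusting a single response.
    """
    if not answers:
        return None, 0.0
    # Normalize whitespace and case so trivially different strings match.
    normalized = [a.strip().lower() for a in answers]
    top, count = Counter(normalized).most_common(1)[0]
    agreement = count / len(normalized)
    return (top if agreement >= min_agreement else None), agreement


answer, agreement = consensus(["42", "42 ", "41"])
```

Here two of three runs agree, so `"42"` is returned with an agreement of about 0.67; three different answers would return `None` and flag the case for review.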

Safety Measures

OpenAI implements safety measures, including additional safety reward signals during reinforcement learning from human feedback (RLHF), to reduce the risk of inaccurate or unsafe outputs.

GPT-4 Vision Resources

Explore GPT-4 Vision's documentation and quick start guides for usage guidelines and safety information:

- Official Documentation: GPT-4 Vision Documentation

- Quick Start Guide: GPT-4 Vision Quick Start

- System Card: GPT-4V(ision) System Card

GPT-4 Vision Tutorials

👉 Discover more GPT-4 Vision Tutorials on lablab.ai

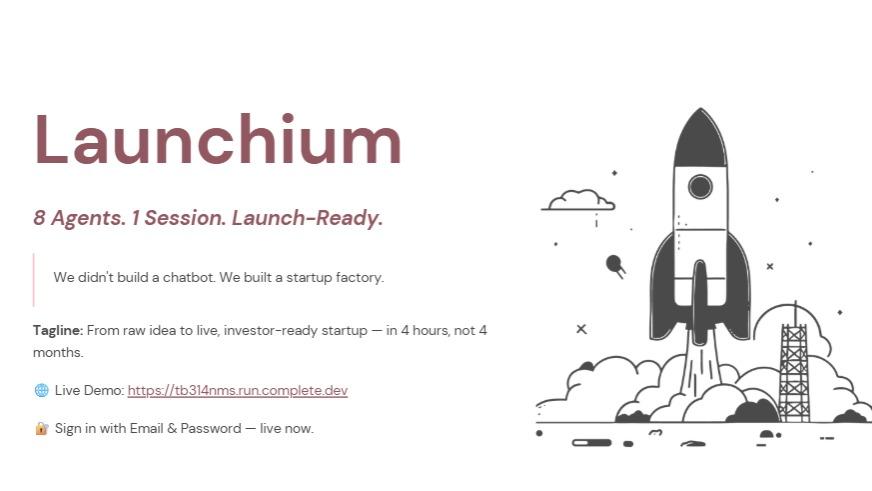

OpenAI GPT-4 Vision AI technology Hackathon projects

Discover innovative solutions crafted with OpenAI GPT-4 Vision AI technology, developed by our community members during our engaging hackathons.
