
DeepSeek V3

| General | |
|---|---|
| Release date | 2024 |
| Author | DeepSeek |
| Website | DeepSeek Models |
| Repository | https://github.com/deepseek-ai |
| Type | MoE (Mixture of Experts) Language Models |

DeepSeek V3 is DeepSeek's most advanced model architecture in this release, designed for complex reasoning tasks and code generation. With enhanced context handling and improved instruction following, it is well suited to technical applications and enterprise deployments.

Key Features

- DeepSeek-V3: 671B parameters (37B activated per token), optimized for math, code, and multilingual tasks.

- Code Generation: Supports 12+ programming languages

- Advanced Reasoning: Chain-of-thought capabilities for multi-step problems

- Enterprise-Grade Security: Built-in content filtering and compliance features

- Speed: About 60 tokens per second (TPS), roughly 3x faster generation than DeepSeek-V2

- Open-Source: FP8/BF16 weights available on Hugging Face
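The "671B parameters (37B activated per token)" figure in the list above follows from the Mixture-of-Experts design: a router selects a small subset of experts for each token, so only part of the model runs per token. The sketch below illustrates that idea with generic softmax top-k gating in plain Python. It is a toy under stated assumptions, not DeepSeek-V3's actual router; the names `route_top_k` and `moe_forward`, the expert count, and the toy experts are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their gate weights.

    Returns a dict mapping expert index -> gate weight (weights sum to 1).
    """
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, router_scores, k):
    """Run only the selected experts and mix their outputs by gate weight."""
    gates = route_top_k(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in gates.items())
    return output, gates


# Hypothetical toy setup: 8 experts, each a simple scaling function.
NUM_EXPERTS = 8
TOP_K = 2
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

# Illustrative router scores for one token (made up for this example).
scores = [0.1, 2.0, -0.5, 1.5, 0.0, -1.0, 0.3, 0.7]
y, gates = moe_forward(1.0, experts, scores, TOP_K)

print("experts used:", sorted(gates))                           # experts 1 and 3
print(f"toy active fraction: {TOP_K / NUM_EXPERTS:.1%}")        # 25.0%
print(f"DeepSeek-V3 active parameter share: {37 / 671:.1%}")    # 5.5%
```

The last line is plain arithmetic on the figures quoted above: 37B of 671B parameters is about 5.5% of the model active per token.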

Useful Links

- Local Deployment Guide for DeepSeek V3

- Model Weights on Hugging Face

- API Documentation

- DeepSeek V3 Paper

- Performance Highlights

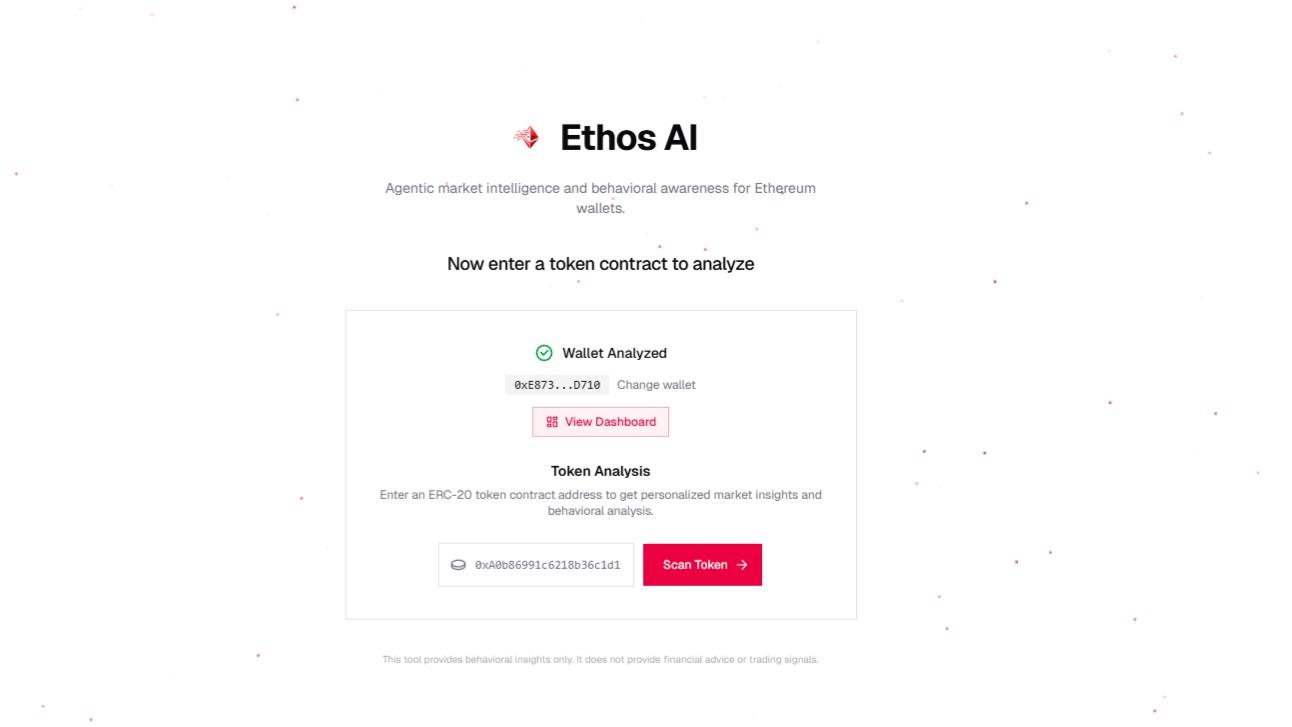

DeepSeek V3 AI technology hackathon projects

Discover innovative solutions built with DeepSeek V3 AI technology, developed by our community members during our hackathons.