
Sharan Nayak (@labsharan)

Events attended: 1

Submissions made: 1

1 year of experience

About me

I am a roboticist and engineer with a Ph.D. in Aerospace Engineering from the University of Maryland and a Master’s in Electrical and Computer Engineering from Georgia Tech. I have previously held researcher and software engineer roles at SpaceX, NASA JPL, Johns Hopkins APL, and Garmin. Across these experiences, I’ve built real-time embedded software, designed algorithms for multi-robot exploration and motion planning, and tackled challenging problems at the intersection of robotics and AI. My technical toolkit includes C++, Python, ROS, embedded systems, and deep learning.

🤓 Latest Submissions

Data infrastructure for Real World Robot Training

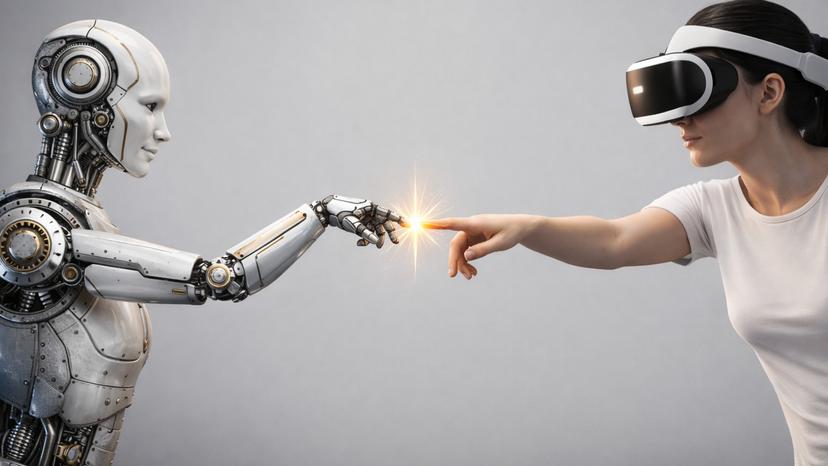

Robots fail outside the lab because their training data doesn’t transfer. We’re building a data infrastructure platform that converts real-world human demonstrations into reusable robot training data using consumer AR/VR headsets and guided capture workflows. Each demonstration is validated and enriched in a closed loop with action annotations, occlusion handling, and simulation-based verification. Instead of storing isolated trajectories, we decompose demonstrations into spatially and temporally structured atomic actions — reusable skill patterns that generalize across tasks and robots. The resulting skills are exported in industry-standard formats (LeRobot, ManiSkill, Isaac Sim) and can be retargeted across robot embodiments without custom engineering. Because XR devices are widely available, data collection can scale beyond robotics labs to everyday users.

15 Feb 2026
