Kenn Tinio @kenn107

is under improvement

1 event attended

1 submission made

10+ years of experience

About me

Full-stack software engineer with 9+ years of experience building scalable web applications and APIs. Passionate about AI, startups, and rapid prototyping through hackathons. Enjoys turning ideas into working products under tight timelines and collaborating with diverse, global teams.


🤓 Latest Submissions

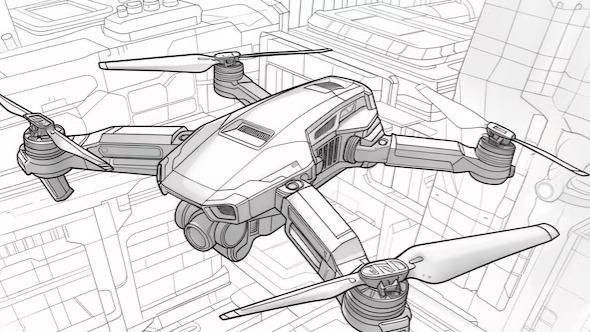

NeuroPilot

People who could benefit from hands-free control, such as those with limited mobility, often cannot access it. NeuroPilot changes that: it is open-source BCI middleware that turns a Muse 2 headband into a real control interface. The Muse 2 is already connected to the app, and we have a working integration with the DJI Tello.

Users train in a 3D simulation and in a dedicated training environment. When their brainwave pattern matches a chosen action (move left, right, up, or down), the backend fires configurable webhooks or WebSocket events. We accumulate 5 actions from the headband, and YOLOv8 runs on the Tello camera feed to detect obstacles and assess the Tello's state. That batch and the vision data are sent to Gemini, which decides the movement. Every command sent to the DJI Tello is the result of an AI API call, so BCI intent is refined by vision and reasoning before it reaches the drone.

The stack is software-only and simulation-first: a Next.js frontend, a FastAPI + WebSocket backend, a web Lab (Three.js), a machine/Connect dashboard for status and live feedback, and per-control webhook URLs. It needs no lab hardware and has no vendor lock-in, so teams can integrate it with existing simulators, digital twins, or robots over HTTP.
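The batch-then-decide loop described for NeuroPilot can be sketched in Python, the language of its FastAPI backend. This is a minimal sketch under stated assumptions, not NeuroPilot's actual code. The four action names and the batch size of 5 come from the description. Everything else is hypothetical: the webhook URLs, the `blocked` list of directions (assumed to be derived from YOLOv8 detections upstream), the drone-state fields, and all function names. `majority_model` is a simple majority vote that stands in for the Gemini call; no Gemini API is used here.

```python
import json
import urllib.request
from collections import Counter
from typing import Callable, Dict, List, Optional

# Action vocabulary taken from the four moves named in the description.
ACTIONS = ("left", "right", "up", "down")
BATCH_SIZE = 5  # the description accumulates 5 headband actions per decision

# Hypothetical per-control webhook URLs (placeholders, not real endpoints).
WEBHOOKS: Dict[str, str] = {a: f"https://example.com/hooks/{a}" for a in ACTIONS}


class ActionBatcher:
    """Collects decoded BCI actions and releases them in fixed-size batches."""

    def __init__(self, size: int = BATCH_SIZE) -> None:
        self.size = size
        self._buf: List[str] = []

    def push(self, action: str) -> Optional[List[str]]:
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action!r}")
        self._buf.append(action)
        if len(self._buf) < self.size:
            return None  # not enough actions collected yet
        batch, self._buf = self._buf, []
        return batch


def build_request(batch: List[str], blocked: List[str], drone_state: dict) -> dict:
    """Bundle BCI intent with vision output and drone state (hypothetical schema)."""
    return {"bci_actions": batch, "blocked": blocked, "drone_state": drone_state}


def majority_model(request: dict) -> str:
    """Stand-in for the Gemini call: choose the most frequent BCI action
    whose direction is not blocked; hover if every candidate is blocked."""
    ranked = [a for a, _ in Counter(request["bci_actions"]).most_common()]
    for action in ranked:
        if action not in request["blocked"]:
            return action
    return "hover"


def to_webhook(move: str) -> Optional[urllib.request.Request]:
    """Build (but do not send) the POST request for the chosen move's webhook."""
    if move not in WEBHOOKS:
        return None  # e.g. "hover": no control webhook to fire
    body = json.dumps({"action": move}).encode("utf-8")
    return urllib.request.Request(
        WEBHOOKS[move],
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def step(
    batcher: ActionBatcher,
    action: str,
    blocked: List[str],
    drone_state: dict,
    model: Callable[[dict], str] = majority_model,
) -> Optional[urllib.request.Request]:
    """Feed one decoded action; return a webhook request once a batch is full."""
    batch = batcher.push(action)
    if batch is None:
        return None
    move = model(build_request(batch, blocked, drone_state))
    return to_webhook(move)


if __name__ == "__main__":
    b = ActionBatcher()
    req = None
    for a in ["left", "left", "up", "left", "up"]:
        req = step(b, a, blocked=["left"], drone_state={"battery": 80})
    print(req.full_url)  # -> https://example.com/hooks/up
```

In this run "left" wins the vote 3 to 2, but because it is marked as blocked, the sketch falls back to "up". Passing `urllib.request.urlopen(req)` would actually send the request. Keeping the decision step behind a `model` callable is one way to swap the majority vote for a real model call without touching the batching or dispatch code.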

15 Feb 2026