🤓 Latest Submissions

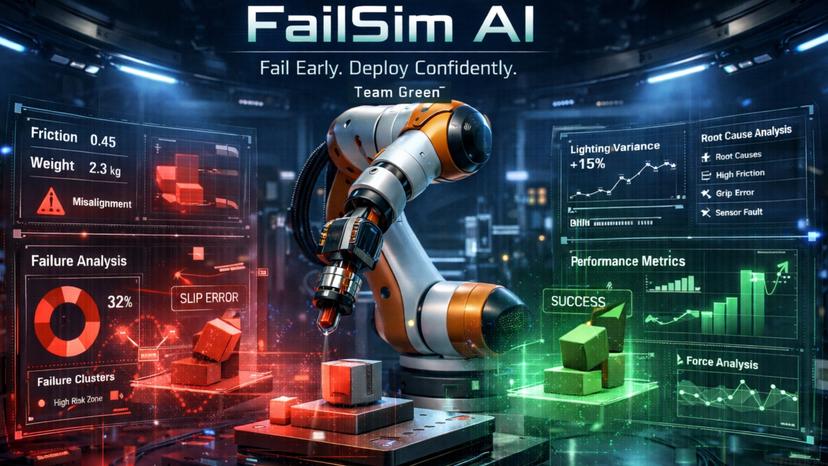

FailSim AI - Multi-Robot Failure Analysis Platform

FailSim AI is a multi-robot failure analysis platform that helps robotics companies discover why their robots fail before they build physical prototypes. Instead of spending weeks on manual testing, teams can run 1,000+ physics-based simulations overnight in PyBullet using real robot specifications (Kuka iiwa7, Franka Panda, UR5, or custom robots). The platform uses Gemini 3 Flash to automatically analyze failure clusters and explain the physical mechanisms behind them. Interactive features include experiment configuration, real-time 5-frame simulation visualization, failure distribution charts, and click-to-analyze AI insights for individual runs. It is built on Vultr infrastructure with a React dashboard and a FastAPI backend, and is deployed 24/7 at http://80.240.20.49. FailSim AI reduces testing time by 10x, cuts costs by 90%, and lets teams fix issues in simulation rather than through expensive hardware iterations. It is aimed at robotics startups, QA teams, and research labs validating designs before real-world deployment.

15 Feb 2026

HotelSys

HotelSys/CryptoStay is a hotel-management and hybrid-payments MVP that integrates a Web2 backend with a MySQL database and a Web3 layer for cryptocurrency payments. On the Web2 side, the system lets staff administer the hotel: create and edit rooms (type, description, price in COP), upload and attach photos, track operational status (available/occupied/cleaning), and manage reservations (creation, lookup, cancellation, and payment-status tracking). Access is role-based (administrator, manager, housekeeping, and guest), which determines the features each user sees. For guests, the application includes an "AI-lite" panel that learns tastes in a simple way: the user can state preferences (trip type, budget, room type, and amenities), and the system also records behavioral signals such as the rooms the user views. From this data it generates personalized recommendations with clear reasons (for example, "Featured room", "Matches your budget", or "You have viewed this room several times") to improve conversion. On the Web3 layer, payment is made with MetaMask on a local network (Hardhat) using ETH or a USDC-style test token. The recommended flow is: first a "pending" reservation is created in Web2, then it is paid in Web3 by specifying the reservation ID. After the transaction, the system captures the txHash, fetches the receipt from the local RPC, and verifies that the contract emitted the payment event for that reservation. If verification succeeds, the reservation is marked as "paid" in MySQL and the txHash is stored as auditable evidence. This architecture shows how a traditional system can incorporate blockchain payments while preserving control, traceability, and user experience.
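The verification step in that flow can be sketched roughly as follows. This is a minimal illustration, not the project's code: the event name `ReservationPaid`, its `reservationId` argument, the already-decoded receipt shape, and the in-memory `Reservation` record (standing in for the MySQL row) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reservation:
    """Hypothetical stand-in for a reservation row in MySQL."""
    reservation_id: int
    status: str = "pending"
    tx_hash: Optional[str] = None


def has_payment_event(receipt: dict, contract: str, reservation_id: int) -> bool:
    """Check a decoded receipt for a payment event tied to the reservation.

    The receipt layout (status, events[].address/name/args) is assumed here.
    """
    if receipt.get("status") != 1:  # 1 means the transaction succeeded
        return False
    for event in receipt.get("events", []):
        if (
            event.get("address", "").lower() == contract.lower()
            and event.get("name") == "ReservationPaid"  # assumed event name
            and event.get("args", {}).get("reservationId") == reservation_id
        ):
            return True
    return False


def confirm_payment(reservation: Reservation, receipt: dict, contract: str) -> bool:
    """Mark a pending reservation as paid only if the receipt verifies."""
    if reservation.status != "pending":
        return False
    if not has_payment_event(receipt, contract, reservation.reservation_id):
        return False
    reservation.status = "paid"
    reservation.tx_hash = receipt["transactionHash"]  # kept as audit evidence
    return True
```

Matching on the contract address as well as the reservation ID means an event emitted by an unrelated contract cannot mark a reservation as paid.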

28 Feb 2026

AMRRA

One of the biggest challenges in modern research is information overload. Analysts, researchers, and organizations often need to process vast amounts of unstructured data, ranging from academic papers and PDFs to dynamic web content. Manually filtering, extracting, and validating insights is time-consuming, prone to bias, and difficult to scale. Our project addresses this gap with a mini AI research lab: a system that combines retrieval, structured extraction, experimentation, and judgment. At its core, we use GPT-5 as a multi-role reasoning agent rather than just a conversational assistant. GPT-5 powers the Extractor, which transforms raw text into structured evidence with minimal noise. The Experimenter module uses GPT-5 to simulate hypotheses, cross-check facts, and test consistency across different sources. Finally, in the Judge role, GPT-5 evaluates credibility, logical soundness, and contextual relevance, acting as a safeguard against misinformation. By integrating GPT-5 with hybrid retrieval (FAISS + BM25), we deliver precise context while minimizing token usage. This workflow solves a real-world problem: enabling faster, more reliable, and more scalable knowledge discovery.
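The description does not say how the FAISS and BM25 results are merged. One common option is reciprocal rank fusion, sketched below as an assumption rather than as AMRRA's actual method; the function name, the document IDs, and the constant `k = 60` are illustrative.

```python
from collections import defaultdict
from typing import Iterable, List, Sequence


def reciprocal_rank_fusion(rankings: Iterable[Sequence[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked well by both retrievers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score, breaking ties by ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical outputs from a dense (FAISS) and a sparse (BM25) retriever.
dense_hits = ["d2", "d1", "d3"]
sparse_hits = ["d1", "d4", "d2"]
fused = reciprocal_rank_fusion([dense_hits, sparse_hits])
```

Because the fusion uses ranks rather than raw scores, it avoids having to put vector similarities and BM25 scores on a shared scale.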

24 Aug 2025

Sarah

Sara is an AI‑powered personal agent built on the Llama‑3.3‑70b‑versatile model and the Groq API, with the OpenAI Agents SDK as the agentic framework. At its core sits a Main Agent that orchestrates three specialized sub‑agents (Food, Travel, and Shopping), each equipped with its own suite of tools. When you ask Sara for dinner recommendations, the Food Agent taps into its own tools; for flights, the Travel Agent queries its own tool; for deals, the Shopping Agent searches major e‑commerce platforms. The frontend is implemented in Next.js and the backend in FastAPI; the AI layer uses the Groq API, Llama, and the OpenAI Agents SDK. Currently, Sara exists as a full‑stack prototype featuring natural conversation in text and voice modes, as well as agent handoffs, while tool integration and Neo4j database integration are pending. Despite these pending integrations, the prototype already demonstrates the core conversational flows, multi‑agent orchestration,

8 Jul 2025
