
Vultr

Vultr is a high-performance cloud computing provider. Its services include scalable GPU instances suited to demanding AI and robotics workloads. Known for its global network, competitive pricing, and reliable infrastructure, Vultr lets developers and businesses deploy and manage cloud resources with little operational overhead.

| General | Details |
|---|---|
| Author | Vultr LLC |
| Release Date | 2014 |
| Website | https://www.vultr.com/ |
| Documentation | https://docs.vultr.com/ |
| Technology Type | Cloud Computing Provider |

Key Features

- Global Network: Access to high-performance data centers worldwide for low-latency deployments.

- Scalable GPU Instances: Offers powerful NVIDIA GPUs for AI, machine learning, and high-performance computing tasks.

- Flexible Cloud Servers: Provides various instance types, including bare metal, cloud compute, and dedicated cloud, to suit diverse needs.

- Custom ISO Support: Allows users to deploy custom operating systems or applications.

- API and CLI Access: Programmatic control of cloud resources, enabling automated provisioning and management.

- Managed Kubernetes: Simplified deployment and management of containerized applications.
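To illustrate the API access point, here is a minimal sketch that lists GPU plans through Vultr's REST API and filters them by region. It is not official client code, and several details are assumptions to check against https://docs.vultr.com/ before use: the `https://api.vultr.com/v2/plans` endpoint, the `type=vcg` filter for Cloud GPU plans, bearer-token authentication, and the response fields `plans`, `id`, `monthly_cost`, and `locations`. The `VULTR_API_KEY` environment variable name is hypothetical.

```python
import json
import os
import urllib.parse
import urllib.request

# Assumption: base URL of Vultr's v2 REST API; verify against https://docs.vultr.com/
API_BASE = "https://api.vultr.com/v2"


def build_plans_request(api_key: str, plan_type: str = "vcg") -> urllib.request.Request:
    """Build a GET request for /plans filtered by plan type ("vcg" assumed to mean Cloud GPU)."""
    query = urllib.parse.urlencode({"type": plan_type})
    return urllib.request.Request(
        f"{API_BASE}/plans?{query}",
        headers={"Authorization": f"Bearer {api_key}"},  # assumed bearer-token auth
        method="GET",
    )


def plans_in_region(plans: list, region: str) -> list:
    """Keep plans whose assumed `locations` field includes `region`, cheapest first."""
    available = [p for p in plans if region in p.get("locations", [])]
    return sorted(available, key=lambda p: p.get("monthly_cost", float("inf")))


def fetch_plans(api_key: str, plan_type: str = "vcg") -> list:
    """Call the API and return the (assumed) "plans" array from the JSON response."""
    with urllib.request.urlopen(build_plans_request(api_key, plan_type), timeout=30) as resp:
        return json.load(resp).get("plans", [])


if __name__ == "__main__":
    key = os.environ.get("VULTR_API_KEY")  # hypothetical variable name
    if key:
        # "ewr" is used here as an example region ID.
        for plan in plans_in_region(fetch_plans(key), "ewr")[:5]:
            print(plan.get("id"), plan.get("monthly_cost"))
    else:
        print("Set VULTR_API_KEY to query the live API.")
```

Keeping request construction and filtering separate from the network call lets you test both without an account. The official CLI and SDKs may be more convenient for day-to-day automation.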

Start Building with Vultr

Vultr is well suited to AI and robotics projects that need substantial compute. With GPU instances and data centers in many regions, developers can train complex models, run simulations, and host AI-powered applications close to their users. The official documentation explains how to get started deploying these workloads.
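As a rough sketch of what deploying a GPU instance could look like, the snippet below assembles a create-instance request without sending it. It assumes a `POST https://api.vultr.com/v2/instances` endpoint that accepts a JSON body with `region`, `plan`, `os_id`, `label`, and optional `sshkey_id` fields and returns an `instance` object. These names come from our reading of the v2 API and should be confirmed at https://docs.vultr.com/. The plan ID, OS ID, and label used below are hypothetical placeholders.

```python
import json
import urllib.request
from typing import List, Optional

API_BASE = "https://api.vultr.com/v2"  # assumption: Vultr API v2 base URL


def instance_payload(region: str, plan: str, os_id: int, label: str,
                     ssh_key_ids: Optional[List[str]] = None) -> dict:
    """Assemble the JSON body for POST /instances (field names assumed, not verified)."""
    if not region or not plan:
        raise ValueError("region and plan are required")
    body = {"region": region, "plan": plan, "os_id": os_id, "label": label}
    if ssh_key_ids:
        body["sshkey_id"] = list(ssh_key_ids)  # assumed field for attaching SSH keys
    return body


def build_create_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Build an authenticated POST request carrying the payload as JSON."""
    return urllib.request.Request(
        f"{API_BASE}/instances",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def create_instance(api_key: str, payload: dict) -> dict:
    """Send the request; the response is assumed to wrap the new server in "instance"."""
    with urllib.request.urlopen(build_create_request(api_key, payload), timeout=30) as resp:
        return json.load(resp)["instance"]


if __name__ == "__main__":
    # Hypothetical placeholder plan/OS IDs; look up real ones via GET /plans and GET /os
    # before calling create_instance(), which would start a billable server.
    body = instance_payload("ewr", "YOUR_GPU_PLAN_ID", 0, "robotics-sim")
    print(json.dumps(body))
```

The script only prints the payload by default, so it never provisions (and bills for) a server by accident. Calling `create_instance` with real IDs is a deliberate extra step.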

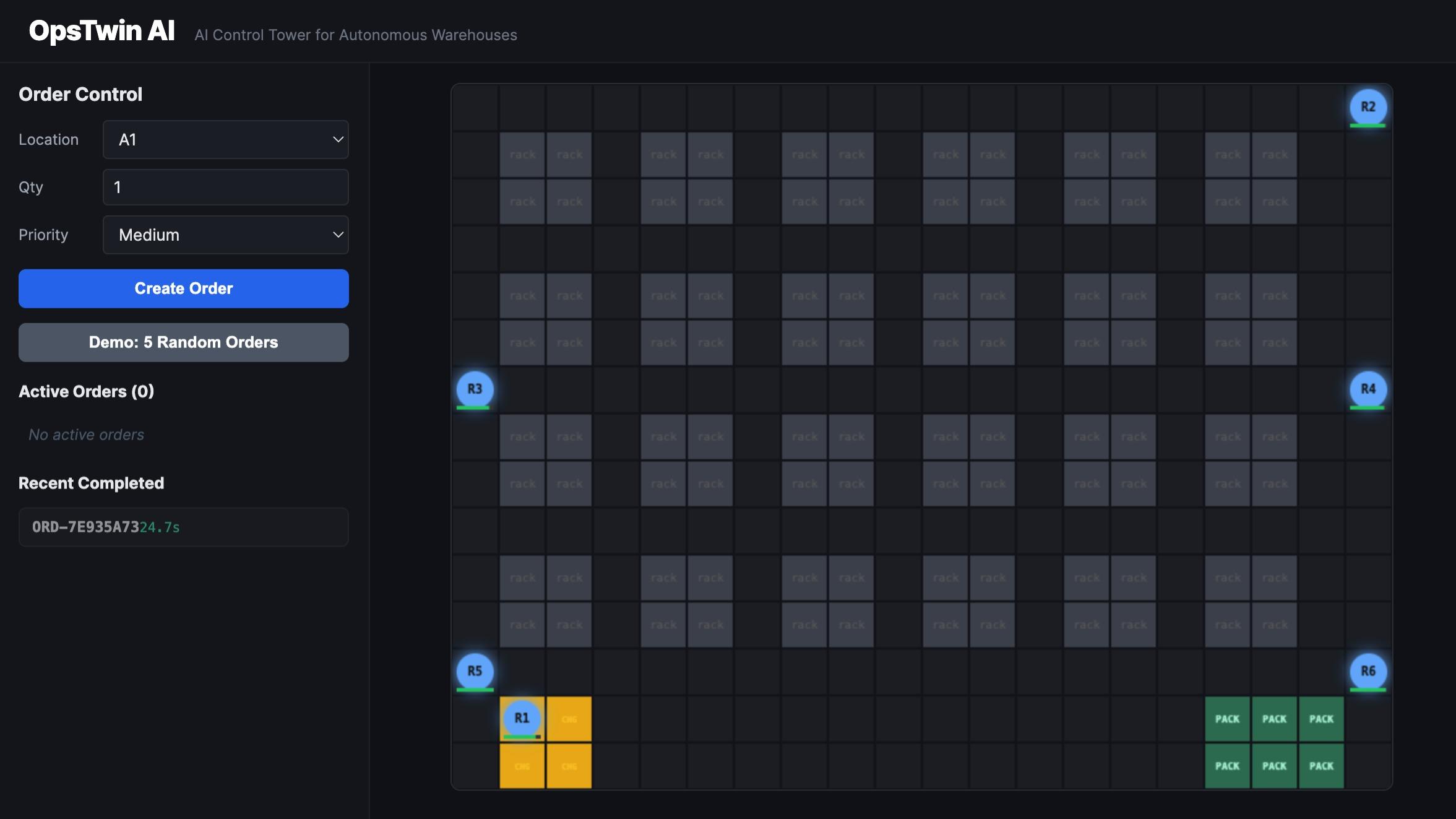

VULTR AI Technologies Hackathon projects

Discover innovative solutions crafted with VULTR AI Technologies, developed by our community members during our engaging hackathons.