Kiro

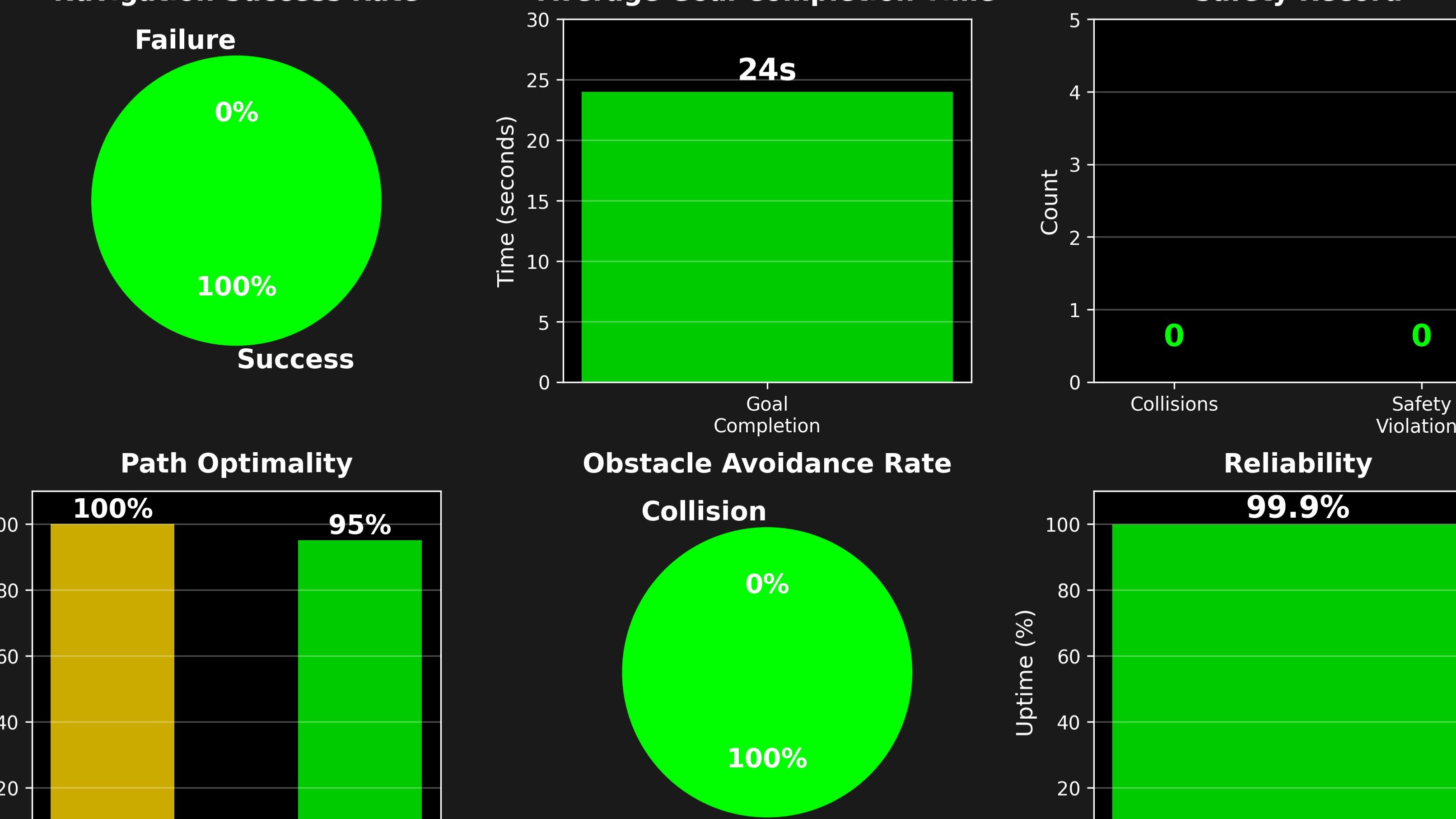

Kiro is an agentic IDE from AWS built around "spec-driven development." It uses AI to interpret natural-language prompts and specifications and then generates code and tests from them, which shortens the development cycle. The goal is to reduce manual coding and improve code quality by keeping the application closely aligned with its specification.
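
To make the idea of spec-driven development concrete, here is a minimal sketch of a specification whose acceptance criteria drive both an implementation and one test per criterion. It is an illustration under stated assumptions, not Kiro output: the `SPEC` layout, the WHEN/SHALL wording, and the names `apply_discount` and `TestDiscountSpec` are all hypothetical.

```python
import unittest

# Hypothetical spec: acceptance criteria written in a WHEN ... SHALL style.
# This is NOT Kiro's spec file format; it only shows a spec that both the
# code and the tests are derived from.
SPEC = {
    "feature": "discount calculation",
    "criteria": [
        "WHEN the order total is below 100, THE system SHALL apply no discount",
        "WHEN the order total is 100 or more, THE system SHALL apply a 10% discount",
        "WHEN the order total is negative, THE system SHALL raise ValueError",
    ],
}


def apply_discount(total: float) -> float:
    """Implementation written to satisfy each criterion in SPEC."""
    if total < 0:
        raise ValueError("order total cannot be negative")
    if total >= 100:
        return round(total * 0.9, 2)  # 10% discount, rounded to cents
    return total


class TestDiscountSpec(unittest.TestCase):
    """One test per acceptance criterion, so every criterion is checked."""

    def test_no_discount_below_threshold(self):
        self.assertEqual(apply_discount(99.99), 99.99)

    def test_discount_at_threshold(self):
        self.assertEqual(apply_discount(100), 90.0)

    def test_negative_total_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1)


if __name__ == "__main__":
    # exit=False keeps the interpreter running after the test report.
    unittest.main(argv=["spec-tests"], exit=False)
```

Mapping each criterion to its own test means that when the spec changes, the test that no longer matches points directly at the criterion that moved.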

| General | Details |
|---|---|
| Author | AWS |
| Release Date | 2025 |
| Website | https://aws.amazon.com/ |
| Documentation | https://aws.amazon.com/documentation-overview/kiro/ |
| Technology Type | Agentic IDE |

Key Features

- Spec-Driven Development: Translates high-level natural-language specifications into working code and tests.

- AI-Powered Code Generation: Uses AI models to write code automatically, reducing development time.

- Automated Test Creation: Generates test cases alongside the code, so new code can be validated immediately.

- AWS Integration: Integrates with the broader AWS ecosystem and builds on AWS cloud infrastructure.

- Agentic Workflow: Uses AI agents to plan and carry out development tasks, from planning through implementation.
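
An "agentic workflow" generally means a loop that picks the next ready task from a plan, executes it, checks the result, and retries or moves on. The sketch below shows that plan-execute-verify loop in plain Python. It assumes a plan is a list of tasks with dependencies; `Task` and `execute_plan` are hypothetical names, no AI model is called (each step is a plain callable that reports whether its check passed), and nothing here reflects how Kiro is implemented internally.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Task:
    """One step of a hypothetical plan derived from a spec."""
    name: str
    run: Callable[[], bool]  # stand-in for agent work; True if its check passed
    depends_on: list[str] = field(default_factory=list)
    done: bool = False


def execute_plan(tasks: list[Task], max_attempts: int = 2) -> list[str]:
    """Run tasks in dependency order, retrying a failed check up to max_attempts."""
    log: list[str] = []
    by_name = {t.name: t for t in tasks}
    while not all(t.done for t in tasks):
        # A task is ready when all of its dependencies are finished.
        ready = [t for t in tasks
                 if not t.done and all(by_name[d].done for d in t.depends_on)]
        if not ready:
            raise RuntimeError("unsatisfiable dependencies in plan")
        task = ready[0]
        for attempt in range(1, max_attempts + 1):
            if task.run():
                task.done = True
                log.append(f"{task.name}: ok (attempt {attempt})")
                break
        else:  # loop finished without a successful attempt
            raise RuntimeError(f"{task.name} failed after {max_attempts} attempts")
    return log


if __name__ == "__main__":
    plan = [
        Task("implement feature", lambda: True, depends_on=["write tests"]),
        Task("write tests", lambda: True),
    ]
    for line in execute_plan(plan):
        print(line)  # "write tests" runs first because the feature depends on it
```

Keeping verification inside the loop, rather than after the whole plan runs, lets a failing step be retried or stopped before later steps build on it.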

Start Building with Kiro

Kiro lets teams build and test applications quickly by working from specifications rather than low-level implementation details. As an AWS service, it is backed by AWS cloud infrastructure. Developers who want to use AI to speed up coding while keeping the output tied to a specification should explore Kiro's capabilities.

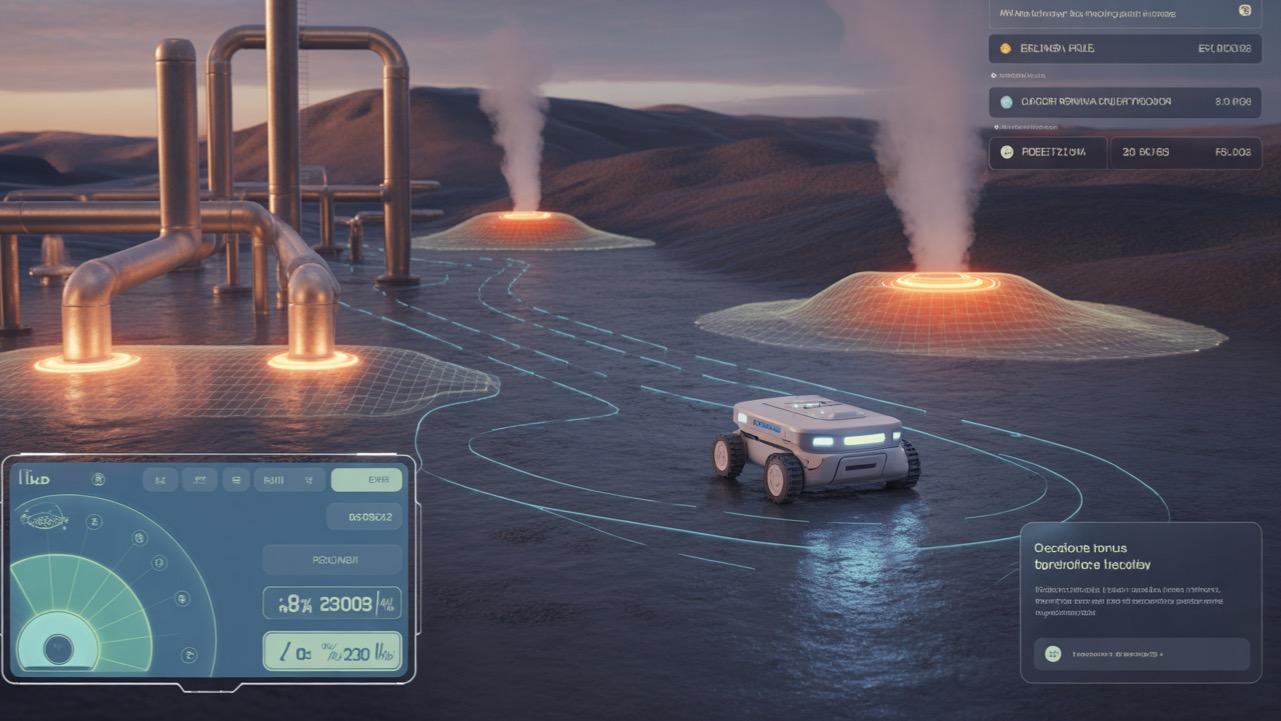

AWS Kiro AI Technology Hackathon Projects

Discover solutions built with AWS Kiro by our community members during our hackathons.